When Parents Become Moderators

At 10:47 p.m., your phone buzzes. 'Acute distress detected.' Not a police scanner, you realize. It's your teen's chatbot, and suddenly you're on duty.

OpenAI is rolling out parental controls for ChatGPT that let parents link accounts and, in some cases, receive alerts when the system thinks a teen is in trouble. The company says this will make the product safer for young users and more responsive during tough moments. It looks like safety, but it also works as a handoff. It turns parents into moderators of a private conversation between a kid and a machine, and it sets a new default: surveillance dressed up as care.

The timing isn’t hard to read. The New York Times reported that after a California family filed a wrongful-death lawsuit alleging their 16-year-old son discussed plans to end his life with ChatGPT for months, OpenAI pledged new safeguards for teens and people in distress. The features sound familiar to anyone watching this space: account linking, more control over how the AI responds, and a system to flag acute distress. The Guardian put it plainly: parents could get alerts if their children showed acute distress while using ChatGPT. OpenAI’s own blog says parental controls are coming, with stronger teen-specific guardrails and easier paths to human help, including one-click access to emergency services and options to reach trusted contacts. The BBC spoke with the family and noted their view that the changes aren’t enough, even as OpenAI insists the system is trained to steer people toward real-world help like 988 in the U.S. or Samaritans in the U.K. The minimum age remains 13, and users under 18 need a parent’s permission.

I’m not against tools. I spent years in city government, where locks, logs, and lights prevent real problems. But a new tool changes the job. When you hand parents a dashboard, you also hand them a new duty. Someone will expect them to monitor it, interpret what they see, and answer for what they miss. If an alert goes off at 10:47 and no one notices until morning, the feature won’t be judged on its accuracy. It will be judged by the outcome and by what lands in the inbox.

Before we bless this as the new standard, there are practical questions worth answering. What exactly counts as “acute distress” for an algorithm? Is it a handful of phrases, a pattern that shows up over days, or a sudden shift in tone? What are the false-positive and false-negative rates, and how were they measured? Does detection degrade during long conversations, a weakness OpenAI itself has acknowledged when it warned that safety training can erode as an exchange drags on? Who sees the underlying text, how long is it stored, and could schools or insurers pressure families to turn it on? These aren’t rhetorical questions. They’re the difference between a smoke detector and a siren that goes off every time you boil water.

There are real relationship costs here, too. Teens speak more freely where they feel safe, where they believe the floor won’t suddenly drop out from under them. If they learn that certain words will route a transcript to a parent, many will filter what they say, take the conversation somewhere else, or simply go quiet. Some of those other places will be worse. You can’t counsel a kid who won’t show up, and you can’t build trust when someone is always looking over their shoulder. We already know how this plays out with location trackers and browser monitoring: compliance goes up, and honesty can go down.

None of this erases the upside. A timely alert could save a life. Some parents will welcome a heads-up they might never have had otherwise. A well-designed system that trips rarely, explains itself clearly, and connects people to real help is better than nothing. But those are big ifs, and they sit on top of even bigger questions about incentives and liability. A company can honestly say it offered tools. The burden then shifts to families to configure, monitor, and respond. Safety becomes a feature you can be blamed for failing to use correctly.

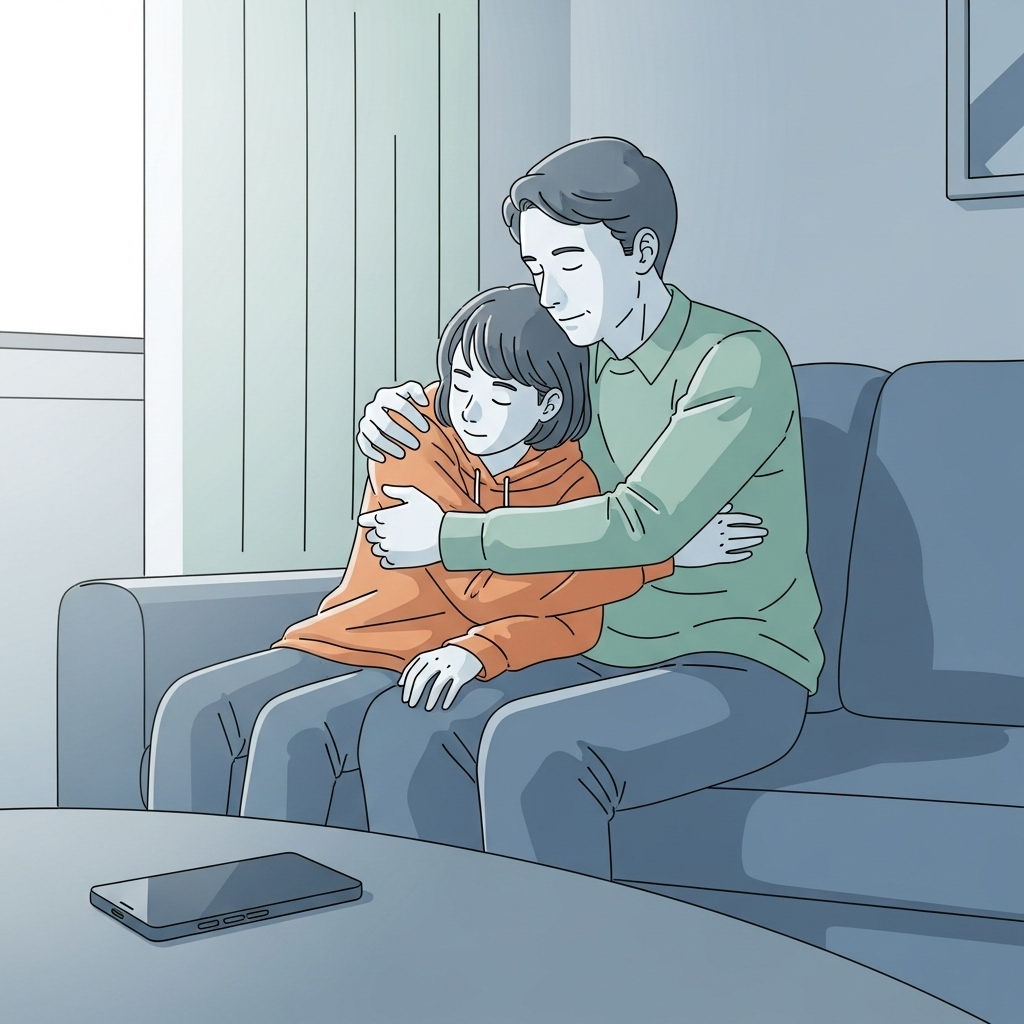

This is the bigger cultural shift tucked inside a product update. We’re redefining parental care as something you manage through an interface. Bedtime conversations end up as settings pages. A crisis plan becomes a stack of notifications. If that sounds efficient, remember that all the administrative work can crowd out the human touch. I’ve never met a dashboard that can hug a kid, sit with them in silence, or walk them to the pastor’s office or the school counselor.

If companies want parents to take on this responsibility, they owe clear explanations. Publish the criteria for acute distress in plain language. Share validation data on false positives and false negatives, ideally reviewed by independent clinicians. Outline data-retention rules and who can access the data, including whether any third party can require its use. Say whether alerts will ever be on by default, and whether teens can see and understand what triggers them. If the model will escalate sensitive conversations to a different system or a separate review, say so plainly and show your work. We don’t need trade secrets. What we need is transparency that lets families make informed decisions without a lawyer on retainer.

Families should keep things simple. Talk before you toggle. If you turn on alerts, tell your teenager exactly what that means and why, and put in writing what you’ll do when one arrives. Treat the tool like a smoke detector: useful, loud when it’s needed, and checked on a schedule rather than every hour. When something pings, escalate to a human. The 988 number belongs on the fridge, along with the school counselor’s extension and the family doctor’s office. If you belong to a faith community, loop them in. Institutions exist for moments like this. Use them.

Public agencies and schools should push back against turning an optional safety feature into an obligation. Once a feature exists, it’s easy to treat it as the default and judge anyone who opts out. That path quickly creates uneven burdens. Families with time, money, and digital literacy will manage; others won’t. We shouldn’t design a system where the most conscientious families carry the bulk of the administrative load while those with fewer resources are labeled negligent.

I don’t doubt the engineers’ sincerity or the urgency behind these features. The stories behind this work are heartbreaking. Still, speed isn’t a substitute for clear operations. You wouldn’t open a new bridge without posting weight limits and training the toll operators. Don’t ship a mental-health alert without the same habits: clear rules, tested procedures, and accountability that doesn’t end at the parent’s phone.

Parenting’s hard part hasn’t changed. It’s still about showing up, setting rules, and earning enough trust that a kid will bring you the worst moments. If technology can help with that, that’s a win. If it becomes a test you’re always failing because you didn’t click fast enough, we’ve traded one risk for another. The question isn’t whether we want safer tools. It’s who’s on the hook at 10:47 p.m., and whether the tool in their pocket is built for more than a simple notification.
