Who Owns the AI Rulebook Now?

If you want to understand how a system actually works, pay attention to who gets to say no. That's where the real power lies.

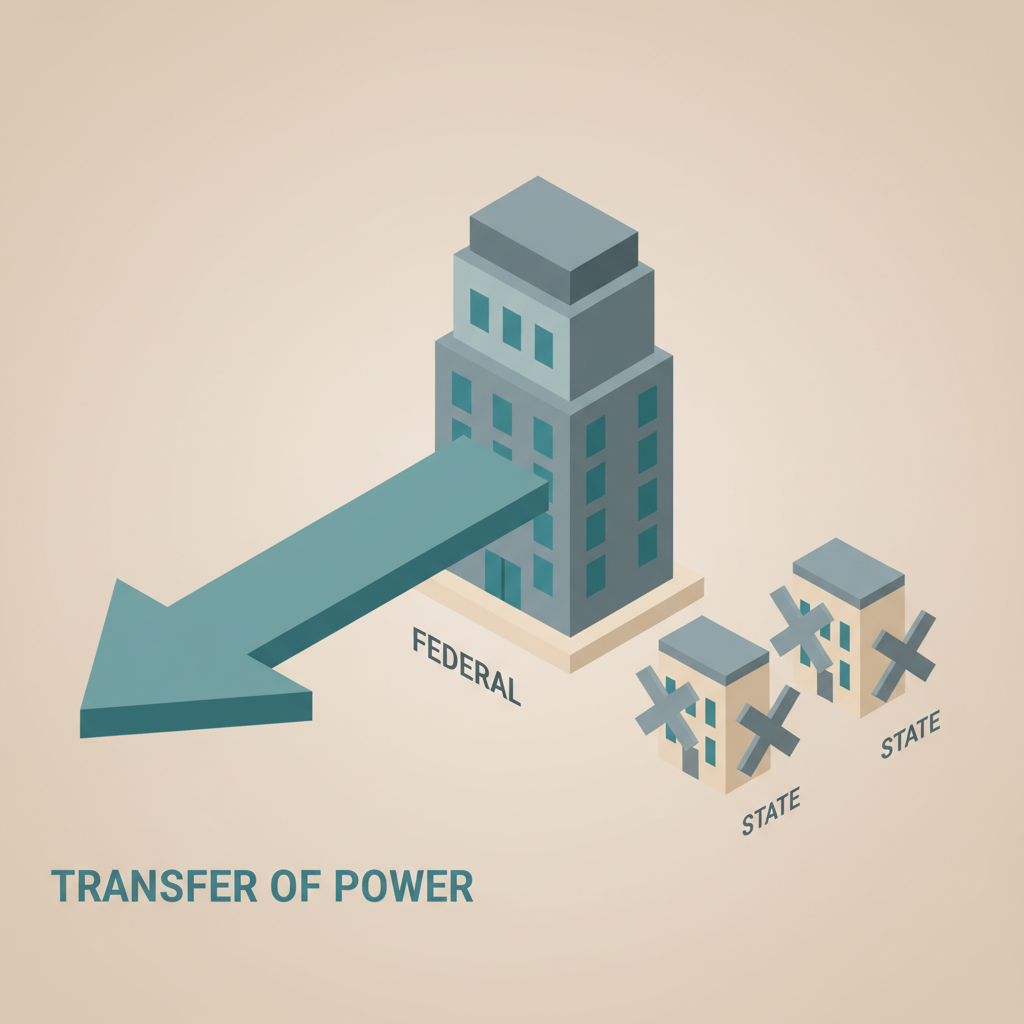

Trump's new executive order on AI is really about shifting who gets to say no. On the surface it claims to prevent a patchwork of state rules that could "stifle innovation." In practice it's more blunt: it strips veto power from states, concentrates that authority in Washington, and tilts the field toward the largest AI vendors.

According to Bloomberg, the order does three things: it tells federal agencies to pursue a “minimally burdensome” national standard, instructs the Justice Department to challenge state AI laws in court, and threatens to withhold federal funding from states that don’t fall in line. NDTV’s summary hits the same notes: discouraging state disclosure requirements, pushing back on algorithmic-bias rules, and opposing broad “catastrophic risk” controls the federal government isn’t ready to police.

Take away the partisan labels and what you have is a plain transfer of power. Before the order, AI regulation was messy but spread out: Congress stalled, federal agencies issued guidance and a few narrow rules, and states like California and Colorado began filling the gap with laws on transparency, discrimination and safety. It was inefficient, yes, but it also meant several actors could still say no when an automated decision crossed a line.

The order tries to flip the board. The message is simple: Washington wants to set the baseline. At the same time, the administration is leaning on federal dollars and the threat of lawsuits to discourage states from going further. Creating an AI litigation unit inside the Justice Department is no small matter; it signals that a state law that seriously irritates industry could face more than lobbying. It could be challenged in court by the federal government.

That's what fragmentation looks like when you pretend you're solving fragmentation. In practice the rules overlap: a White House deregulatory stance that can be reversed by the next administration; state laws that keep getting passed but then live under constant legal and funding pressure; and a federal legislative gap, with no clear statute to settle preemption. In boardroom terms, it's the old line: everyone owns it, so no one really does.

From an accountability point of view, this is poisonous. If an AI system wrecks someone's credit, denies a medical claim, or causes a wrongful arrest, people will ask who was supposed to be watching. Under the order you end up with a circle of blame. States will say, "We tried to regulate, but the DOJ threatened us." Federal agencies will reply, "We followed the executive order; Congress never told us to do more." Companies will point to compliance with a weak federal baseline and whatever state rules survived litigation. Everyone has an explanation, and the person harmed is left with the mess.

Supporters say one national standard beats fifty conflicting ones. That rings true in part: I’ve sat through strategy sessions where we sketched twenty jurisdictions on a whiteboard and debated whether to ship a product at all. Chasing a tangle of rules becomes an innovation tax. But centralization only helps when the national standard is both clear and credible. Right now the federal rulebook on AI is neither, and preempting state rules to create a void does not give you the benefits of a strong national floor.

There are real legal limits to how far this order can go. Members of Congress, including Democrat Ted Lieu, have already called it unconstitutional on basic federalism grounds, and they have a point. An executive order cannot unilaterally erase state police powers. Conditional spending has guardrails. Litigation is slow and its results are mixed. So the order looks less like a tidy preemption and more like a long campaign to chill state initiative.

If state officials still care about meaningful oversight, they should start with the levers this order can’t easily touch.

Start with procurement. States and cities buy a lot of software and services, so contracts are a natural place to lock in safeguards: audit rights, clear disclosure of training data, regular bias testing and a real human override. You don't need new laws to write a tougher RFP. Plainly put, "If you want our money, your system has to be inspectable."

Second, rely on sectoral enforcement. Many state attorneys general already have powers under consumer-protection, civil-rights, insurance or banking laws. Those statutes don’t care whether the harm came from a human clerk or an algorithm. If an AI system produces discriminatory outcomes or deceptive practices, AGs can still take companies to court on existing legal grounds, even if a state’s bespoke “AI Act” is tied up in federal litigation.

Third, build coalitions. States that want to keep control should coordinate their rules. Imagine California, New York and Illinois, along with a few others, agreeing on a set of basic audit and disclosure standards and then applying them consistently. Vendors will adjust, because markets follow the largest buyers.

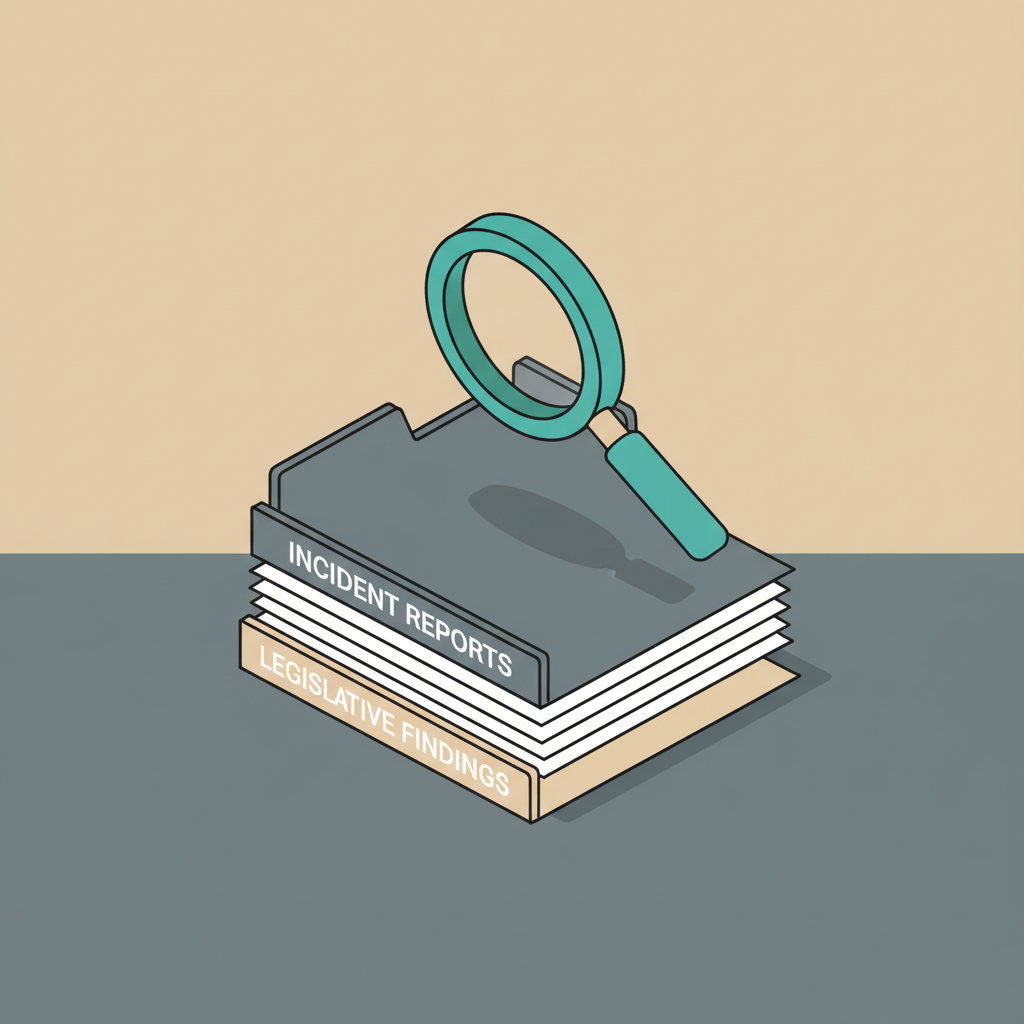

Finally, the unglamorous but vital work is record-keeping. If the Justice Department challenges a state AI rule as overreach, a court will look for hard evidence: legislative findings, incident reports, enforcement history. When state agencies and civil-society groups methodically document real harms from automated systems, they build the factual record that can justify stricter oversight. That documentation matters when judges decide whether a state law is a legitimate exercise of police power or a speculative overstep.

None of this is glamorous. It's more like routine upkeep. The executive order is just one move in a long, drawn-out contest over who gets to oversee the systems that are quietly making ever more consequential decisions. This isn't a fight about sci-fi utopias or existential doom. It's a practical question: does any public actor, at any level, still have the authority to say “no” when an opaque model starts shaping people’s lives?

If you run a state agency, a city, or a large public institution, think of the order as a set of limits rather than a final judgment. Figure out which levers are off-limits and which you can still use, then assign responsibility for the remaining ones. Authority over automated systems, much like in older bureaucracies, tends to land with whoever is willing and able to draw a clear line and enforce it.

Sources

- https://www.ndtvprofit.com/business/trump-signs-order-seeking-to-limit-state-level-ai-regulation

- https://news.bgov.com/artificial-intelligence/trump-signs-order-seeking-to-limit-state-level-ai-regulation

- https://www.govtech.com/artificial-intelligence/trump-signs-executive-order-reining-in-state-ai-regulation

- https://www.littler.com/news-analysis/asap/president-signs-executive-order-limit-state-regulation-artificial-intelligence

- https://www.squirepattonboggs.com/en/insights/publications/2025/02/key-insights-on-president-trumps-new-ai-executive-order-and-policy-regulatory-implications
